Like many people who have been studying artificial intelligence, I was interested to try out OpenAI's new ChatGPT interface. Early reports suggest it is good at telling stories but perhaps plays a little fast and loose with facts. The promise of a general AI bot is captivating. It combines the power of the entire Internet to provide information, natural language processing to figure out what you're looking for and respond usefully, and, of course, the ability of computers to do what they were originally designed to do: compute. It can make suggestions based on thousands of calculations, all in a few seconds. But what if those calculations are wrong?

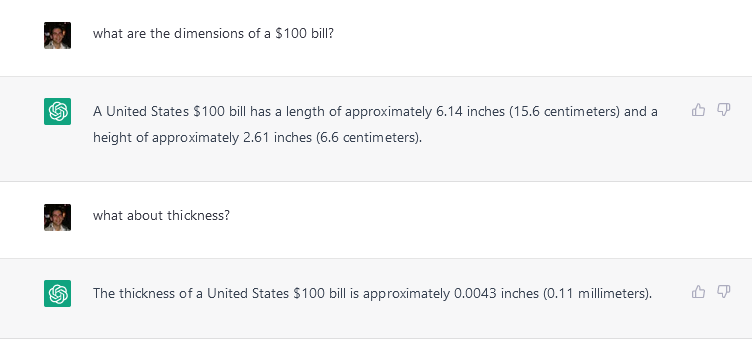

I ran a test today that I expected ChatGPT to ace. It was simple: how much money can fit in a standard briefcase? I knew it would have to look up the dimensions of a bill and of a briefcase, then do a little math. The test could be extended to see how much money would fit in a swimming pool, and so on. We never got that far, though, because the bot revealed a huge problem with its math. Here's how I set it up:

So far, so good. But then it went off the rails:

Now, this all seems reasonable enough. Its assumptions about the briefcase look right. The formulas look right. But the final answer didn't smell right at all. I was expecting the briefcase to hold a little more than $1 million, and its answer was off by a factor of 100. Maybe it got confused by the $100 bill? But no, it calculated that the briefcase would hold a million bills!

I pulled out my calculator, redid the math it said it was doing, and expected to see the same result. I didn't. Specifically, 6.14 × 2.61 × 0.0043 is actually about 0.0689, which is nowhere near 0.000716. What?
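For anyone who wants to repeat the check without a calculator, here is a minimal Python sketch. It multiplies the three bill dimensions from the bot's formula (length, width, and thickness, in inches) and compares the product with the 0.000716 the bot reported. The helper name `bill_volume` and the constant names are my own, chosen for illustration only.

```python
# Bill dimensions in inches, taken from the formula ChatGPT showed
LENGTH_IN = 6.14
WIDTH_IN = 2.61
THICKNESS_IN = 0.0043

# The product ChatGPT reported, in cubic inches
CHATGPT_VOLUME = 0.000716


def bill_volume(length: float, width: float, thickness: float) -> float:
    """Volume of one bill in cubic inches: length x width x thickness."""
    return length * width * thickness


volume = bill_volume(LENGTH_IN, WIDTH_IN, THICKNESS_IN)
# How many times smaller the bot's figure is than the real product
ratio = volume / CHATGPT_VOLUME

print(f"Correct product: {volume:.4f} cubic inches")
print(f"ChatGPT's figure is too small by a factor of about {ratio:.0f}")
```

Running it prints a product of 0.0689 cubic inches and a factor of about 96. Since the number of bills that fit is the briefcase volume divided by the volume of one bill, underestimating the bill's volume by that much would plausibly inflate the bill count by about the same factor, which lines up with the factor-of-100 discrepancy described above.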

That didn't help at all. Why would it try converting to cubic feet?

I believe it was treating its previous response as established fact and simply restating it in a different form.

But fundamentally, it was still coming up with the wrong product in a simple multiplication exercise. You're gonna drive my car in the future?

Interestingly, it got a different answer that was a lot closer, but still wrong. How was this possible? The next response showed that it was answering my follow-up by relying on what it took to be a fact already established in our conversation. Of course, that "fact" was wrong. So, within a conversation, the bot can be led to repeat bad information that it invented itself. However, I was finally able to get it to admit to a calculation error by telling it the right answer:

Does it really believe this, or is it relying on me to establish the fact? I tried to get it to explain how it made the mistake. I hypothesized that, because the number was close, it had pulled a different figure for the volume of a dollar bill in cubic inches and used that instead of calculating from the dimensions it had stated.

Now here's where I made a mistake. When ChatGPT responds, you can watch it "type" each response like a typewriter, quickly producing a line at a time. This time, it started writing very, very slowly and got halfway through a response that blamed the wrong answer on an incorrect conversion to millimeters. That could not possibly have been true; it was making up an answer. Then it stalled and got stuck. Does it take more computing power to lie? Unfortunately, I neglected to take a screenshot, and when I refreshed the chat and tried again, I got a completely different answer.

None of this makes sense to me. If you plug the right numbers into the right equation, why would a computer ever get the wrong answer?

Note the contradiction. I called it out.

Of course, I can't imagine it's actually making "transcription errors." More likely, it has learned that math mistakes are often explained that way and floated it as a possible cause. The other problem is that it's giving yet another wrong answer.

Wait, what? Now it's become completely unmoored. Finally, I gave it a hint to steer it to the right answer:

We are rushing headlong into disaster. When we talk about using AI to drive our cars and prescribe the correct medications, we assume that, as part of its "intelligence," it will follow our rules. What happens when it ignores them because it thinks it knows better? The rules of multiplication have been known for millennia, yet this example of our best artificial intelligence completely ignores them. This test was easily verifiable. Most AI applications are not. Yet today, millions of people are ready to base important decisions on this output. It's not ready. Neither are we.
